Popularity-bias Deconfounding (PD) - Estimation

Estimation method

Last time, we identified the following causal effect.

To estimate the statistical estimand on the RHS, we first estimate
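The estimand can be assumed to have the standard backdoor-adjustment form $\sum_z P(C=1 \mid u, i, z)\,P(Z=z)$, with popularity $Z$ as the adjusted confounder. Once a table of conditional estimates $P(C=1 \mid u, i, z)$ is available, the adjustment is a weighted average over popularity strata. The minimal NumPy sketch below assumes a discrete $Z$. The array layout and the name `backdoor_adjust` are illustrative assumptions, not the authors' code.

```python
import numpy as np

def backdoor_adjust(p_c_given_uiz, p_z):
    """P(C=1 | do(U=u, I=i)) = sum_z P(C=1 | u, i, z) * P(Z=z).

    p_c_given_uiz: (n_users, n_items, n_z) estimates of P(C=1 | u, i, z).
    p_z: (n_z,) marginal distribution of the popularity stratum Z.
    """
    p_z = np.asarray(p_z, dtype=float)
    if not np.isclose(p_z.sum(), 1.0):
        raise ValueError("p_z must sum to 1")
    # Contract the z axis against P(z): a weighted average over strata
    return np.asarray(p_c_given_uiz, dtype=float) @ p_z

# One user, two items, two popularity strata (made-up numbers)
p = np.array([[[0.2, 0.6], [0.1, 0.3]]])
print(backdoor_adjust(p, [0.75, 0.25]))
```

The hard part is the conditional model itself. The adjustment step reduces to a single matrix-vector contraction.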

It remains to show how to parameterize
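As we understand the PD paper, one concrete parameterization multiplies a matching term by a popularity term: $P(C=1 \mid u, i, z) \propto \mathrm{ELU}'(f_\theta(u,i)) \cdot z^{\gamma}$. Here $f_\theta(u,i)$ is a matrix-factorization inner product. $\mathrm{ELU}'(x)$ equals $e^x$ for $x \le 0$ and $x+1$ otherwise, which keeps the matching term positive. The sketch below assumes that form. The function names and the exponent `gamma` are illustrative.

```python
import numpy as np

def elu_prime(x):
    """ELU shifted up by 1: exp(x) for x <= 0, x + 1 otherwise. Always positive."""
    x = np.asarray(x, dtype=float)
    # np.minimum avoids overflow warnings from exp on large positive inputs
    return np.where(x <= 0, np.exp(np.minimum(x, 0.0)), x + 1.0)

def popularity_aware_score(user_emb, item_emb, item_pop, gamma):
    """Unnormalized estimate of P(C=1 | u, i, z) as ELU'(f(u, i)) * z**gamma.

    user_emb: (n_users, d); item_emb: (n_items, d);
    item_pop: (n_items,) positive popularity values z_i; gamma: exponent.
    """
    match = elu_prime(user_emb @ item_emb.T)  # (n_users, n_items) matching term
    pop_term = np.power(item_pop, gamma)      # (n_items,) popularity term
    return match * pop_term                   # broadcast over users

U = np.array([[1.0, 0.0]])                    # one user (made up)
V = np.array([[0.5, 0.0], [-1.0, 0.0]])       # two items (made up)
print(popularity_aware_score(U, V, np.array([4.0, 1.0]), gamma=0.5))
```

The matching term and the popularity term stay separate factors. That separation is what later allows the popularity part to be handled on its own.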

Lastly, since we only care about how items are ranked, we do not need to normalize the estimate into a proper probability.
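This claim can be checked directly. Dividing every score by the same positive constant leaves their order unchanged, so top-k lists built from raw scores and from normalized probabilities coincide. A minimal sketch with made-up scores; `top_k` is a hypothetical helper.

```python
import numpy as np

def top_k(scores, k):
    """Indices of the k highest scores, best first."""
    return np.argsort(-np.asarray(scores), kind="stable")[:k]

raw = np.array([3.2, 0.7, 5.1, 1.9])   # unnormalized, positive scores (made up)
probs = raw / raw.sum()                 # the same scores as a proper distribution
print(top_k(raw, 3), top_k(probs, 3))   # identical top-3 lists
```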

Next, we estimate

Notice that

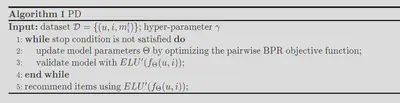
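Assume $P(C=1 \mid u, i, z) \propto \mathrm{ELU}'(f_\theta(u,i)) \cdot z^{\gamma}$ and a discrete popularity variable $Z$. Then the adjustment sum factorizes:

$\sum_z \mathrm{ELU}'(f_\theta(u,i))\, z^{\gamma} P(z) = \mathrm{ELU}'(f_\theta(u,i)) \cdot \mathbb{E}[Z^{\gamma}]$.

The second factor is the same for every item, and $\mathrm{ELU}'$ is strictly increasing. Ranking by the deconfounded score is therefore the same as ranking by $f_\theta(u,i)$. The numerical sketch below checks this on made-up values. All names are hypothetical.

```python
import numpy as np

def elu_prime(x):
    """exp(x) for x <= 0, x + 1 otherwise; strictly positive and increasing."""
    x = np.asarray(x, dtype=float)
    return np.where(x <= 0, np.exp(np.minimum(x, 0.0)), x + 1.0)

def deconfounded_scores(f, z_values, p_z, gamma):
    """sum_z ELU'(f_i) * z**gamma * P(z) for every item i.

    f: (n_items,) matching scores f(u, i) for one user.
    z_values, p_z: (n_z,) popularity strata and their probabilities P(Z = z).
    """
    per_stratum = elu_prime(f)[:, None] * (z_values ** gamma)[None, :]  # (n_items, n_z)
    return per_stratum @ p_z

# Made-up example values, for illustration only
f = np.array([0.4, -1.2, 2.0, 0.0])
z_values = np.array([1.0, 10.0, 100.0])
p_z = np.array([0.5, 0.3, 0.2])
scores = deconfounded_scores(f, z_values, p_z, gamma=0.2)
const = np.sum(z_values ** 0.2 * p_z)             # E[Z**gamma], identical for every item
print(np.allclose(scores, elu_prime(f) * const))  # True
print(np.argsort(-scores), np.argsort(-f))        # identical orderings
```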

To summarize, we fit the historical interaction data with
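The fitting step can be sketched by assuming a pairwise BPR loss, which is what we understand the PD paper to use. The loss is applied to the popularity-aware score $\mathrm{ELU}'(\langle p_u, q_i \rangle) \cdot z_i^{\gamma}$ over (user, positive item, negative item) triples. Only the loss is shown; gradients and the optimizer would come from an autodiff framework. Under this parameterization, ranking at recommendation time would use the matching term alone. All names below are illustrative.

```python
import numpy as np

def elu_prime(x):
    """exp(x) for x <= 0, x + 1 otherwise; strictly positive and increasing."""
    x = np.asarray(x, dtype=float)
    return np.where(x <= 0, np.exp(np.minimum(x, 0.0)), x + 1.0)

def bpr_loss(user_emb, item_emb, item_pop, gamma, triples):
    """Mean BPR loss over (user, positive item, negative item) triples.

    Each item is scored as ELU'(<p_u, q_i>) * z_i**gamma, and the loss per
    triple is -log(sigmoid(s_pos - s_neg)).
    """
    u, i, j = (np.asarray(col) for col in zip(*triples))
    s_pos = elu_prime(np.sum(user_emb[u] * item_emb[i], axis=1)) * item_pop[i] ** gamma
    s_neg = elu_prime(np.sum(user_emb[u] * item_emb[j], axis=1)) * item_pop[j] ** gamma
    # -log(sigmoid(x)) == log(1 + exp(-x)); logaddexp(0, -x) evaluates it stably
    return float(np.mean(np.logaddexp(0.0, -(s_pos - s_neg))))

def rank_items(user_emb, item_emb, user):
    """Rank all items for one user by the matching term alone (popularity factor dropped)."""
    match = elu_prime(item_emb @ user_emb[user])
    return np.argsort(-match, kind="stable")

U = np.array([[1.0, 0.0]])                 # made-up embeddings
V = np.array([[1.0, 0.0], [-1.0, 0.0]])
pop = np.array([1.0, 1.0])
print(bpr_loss(U, V, pop, 0.5, [(0, 0, 1)]), rank_items(U, V, 0))
```

The popularity exponent takes part in training, where it absorbs the popularity effect in the observed data. It plays no role in the final ranking.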

Datasets

In this article, experiments are conducted on three datasets. We summarize them in the following table.

| Dataset | Interaction type | Size |
|---|---|---|
| Kwai | click | 7,658,510 interactions between 37,663 users and 128,879 items |
| Douban Movie | rating | 7,174,218 interactions between 47,890 users and 26,047 items |
| Tencent | like | 1,816,046 interactions between 80,339 users and 27,070 items |

From the table above, we can see that although we call

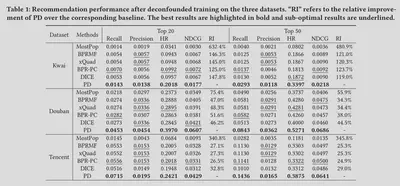

Performance

The proposed method (PD) is compared with a range of baselines. These include heuristic methods such as MostPop and MostRecent, methods without popularity adjustment such as BPRMF, and state-of-the-art methods for handling popularity bias such as BPR-PC and DICE, among others. PD consistently outperforms all baselines on all three datasets. The degree of improvement depends on the popularity properties of each dataset. Higher popularity drifts imply that item popularity

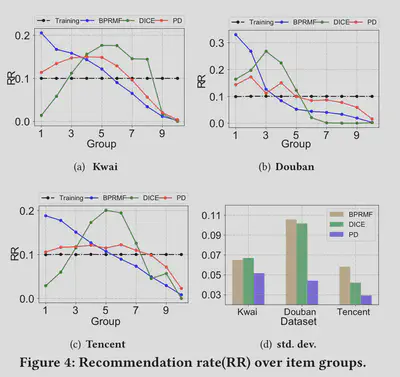

A recommendation analysis is conducted on several of these methods to measure how strongly their recommendations favor popular items. For this comparison, the authors define a metric called Recommendation Rate (RR). First, they sort the items in the recommendation list by popularity in descending order. Then they cut the sorted list into 10 groups such that every group has the same total popularity (the sum of popularity over its items). Now, we can define the RR of group
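The grouping step can be sketched as follows. The sketch assumes the items to be grouped come with nonnegative popularity counts, and it splits them into groups of roughly equal total popularity, from most to least popular. `group_share_of_recommendations` is a hypothetical stand-in that reports the fraction of recommended slots landing in each group. It is not necessarily the RR the authors define.

```python
import numpy as np

def popularity_groups(item_pop, n_groups=10):
    """Assign each item to a group 0..n_groups-1 (0 = most popular) so that
    groups hold roughly equal shares of total popularity."""
    item_pop = np.asarray(item_pop, dtype=float)
    order = np.argsort(-item_pop, kind="stable")          # most popular first
    sorted_pop = item_pop[order]
    # Popularity share accumulated *before* each item in the sorted list
    before = (np.cumsum(sorted_pop) - sorted_pop) / sorted_pop.sum()
    group_sorted = np.minimum((before * n_groups).astype(int), n_groups - 1)
    groups = np.empty_like(group_sorted)
    groups[order] = group_sorted                           # map back to item ids
    return groups

def group_share_of_recommendations(rec_lists, groups, n_groups=10):
    """Hypothetical stand-in: fraction of all recommended slots per group."""
    recs = np.concatenate([np.asarray(r) for r in rec_lists])
    counts = np.bincount(groups[recs], minlength=n_groups)
    return counts / counts.sum()

pop = np.array([50.0, 30.0, 10.0, 5.0, 5.0])               # made-up popularity counts
g = popularity_groups(pop, n_groups=2)
print(g, group_share_of_recommendations([[0, 1], [0, 2]], g, n_groups=2))
```

A popularity-neutral recommender would spread its slots more evenly across groups. A strongly biased one would concentrate them in group 0.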

As the figure above shows, PD's curve still declines from popular to unpopular groups, but it is flatter than those of the other methods. This means PD is better at eliminating the effect of popularity when recommending items.