Popularity-bias Deconfounding (PD) - Sensitivity analysis

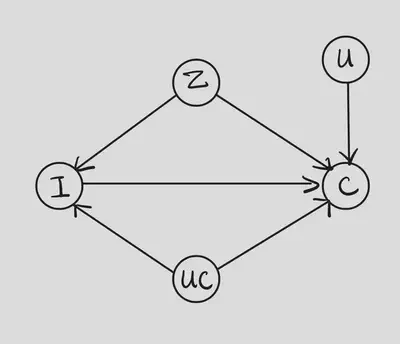

In the original article 1, the authors focus on the method for identification and estimation and put little effort into discussing the assumptions. The article contains no sensitivity analysis. However, the authors do make some important assumptions that are worth discussing. One of those assumptions is that the popularity(

Recall that the causal estimand we are interested in is

Manski’s Method

Since we have conditioned on

Hence, we can only work in the no-assumptions case. We still need to assume

Therefore,

where

These bounds do not contain

Although we obtain the bounds above, they are probably too loose to support any satisfying conclusion, which is the cost of the generality of Manski’s method.
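To illustrate why no-assumptions bounds tend to be loose when the intervention has many levels, here is a minimal sketch of the generic Manski bounds on a mean potential outcome for a bounded outcome. It is not the derivation used in this report: the function name `manski_bounds`, the toy data, and the outcome range [0, 1] are all assumptions made for illustration. The width of the interval is (1 − P(T = level)) × (y_max − y_min), so it grows as each level becomes rarer.

```python
def manski_bounds(outcomes, treatments, level, y_min=0.0, y_max=1.0):
    """No-assumptions bounds on E[Y(level)] for an outcome in [y_min, y_max].

    Hypothetical helper for illustration only. Units that did not receive
    `level` have an unobserved potential outcome, which is replaced by the
    worst case (y_min) for the lower bound and the best case (y_max) for
    the upper bound.
    """
    n = len(outcomes)
    if n == 0 or n != len(treatments):
        raise ValueError("outcomes and treatments must be non-empty and aligned")
    matched = [y for y, t in zip(outcomes, treatments) if t == level]
    p_level = len(matched) / n      # P(T = level)
    observed = sum(matched) / n     # E[Y | T = level] * P(T = level)
    lower = observed + (1.0 - p_level) * y_min
    upper = observed + (1.0 - p_level) * y_max
    return lower, upper


# Toy data (made up): a binary outcome and a four-level intervention.
toy_outcomes = [1, 0, 1, 1, 0, 0, 1, 0]
toy_treatments = [0, 0, 1, 1, 2, 2, 3, 3]

lower, upper = manski_bounds(toy_outcomes, toy_treatments, level=1)
print(f"E[Y(1)] lies in [{lower:.2f}, {upper:.2f}], width {upper - lower:.2f}")
```

With four equally likely levels, each interval already has width 0.75 on a [0, 1] outcome, which shows how quickly the bounds become uninformative as the number of levels increases.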

Other methods

We could also resort to Rosenbaum’s and VanderWeele’s methods for sensitivity analysis. However, the methods we learned in class are all for binary interventions, and I am not sure how to generalize them to the multi-level case.

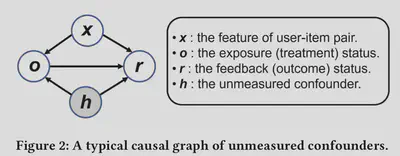

There is a work 2 on recommender systems that provides a sensitivity analysis. However, it uses a different causal graph, in which the intervention (the exposure status) is still binary. Figure 2 shows this graph.

Their method is also different. First, they show that existing propensity-based methods, such as the IPS and DR estimators, are biased in the presence of unmeasured confounders. Then, they propose a robust deconfounder framework based on sensitivity analysis of the propensity score model. Specifically, they use the gamma sensitivity parameter from Rosenbaum’s method.
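One common way to formalize a gamma-type sensitivity assumption on a propensity model is to require that the odds ratio between the nominal (estimated) propensity and the true propensity lies in [1/Γ, Γ]. Under that assumption, the true inverse propensity is bounded by 1 + (1/p − 1)/Γ and 1 + (1/p − 1)Γ, where p is the nominal propensity. The sketch below computes these bounds. Whether the cited work uses exactly this form is an assumption here, and the function name `inverse_propensity_bounds` is hypothetical.

```python
def inverse_propensity_bounds(p_nominal, gamma):
    """Bounds on the true inverse propensity under an odds-ratio constraint.

    Assumes (for illustration) that the odds ratio between the nominal and
    the true propensity lies in [1/gamma, gamma], with gamma >= 1.
    gamma = 1 means no unmeasured confounding, so both bounds equal 1/p.
    """
    if not 0.0 < p_nominal <= 1.0:
        raise ValueError("p_nominal must be in (0, 1]")
    if gamma < 1.0:
        raise ValueError("gamma must be at least 1")
    inverse_odds = 1.0 / p_nominal - 1.0   # (1 - p) / p
    lower = 1.0 + inverse_odds / gamma
    upper = 1.0 + inverse_odds * gamma
    return lower, upper


# Example with a made-up nominal propensity of 0.5 and several gamma values.
for gamma in (1.0, 1.5, 2.0):
    low, high = inverse_propensity_bounds(0.5, gamma)
    print(f"gamma={gamma}: inverse propensity in [{low:.3f}, {high:.3f}]")
```

The interval collapses to the usual IPS weight 1/p at Γ = 1 and widens as Γ grows, which is what lets an estimator be evaluated against the worst case inside the interval. This construction depends on a binary exposure with a single propensity per user-item pair.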

In short, some existing work applies sensitivity analysis in the context of recommender systems, but it is restricted to binary interventions and does not carry over to the setting we discuss. How to perform an appropriate sensitivity analysis in our setting may still be an open problem.